Subsumption architecture is a reactive robotic architecture heavily associated with behavior-based robotics, which was very popular in the 1980s and 1990s. The term was introduced by Rodney Brooks and colleagues in 1986. Subsumption has been widely influential in autonomous robotics and elsewhere in real-time AI.

Overview

Subsumption architecture is a control architecture that was proposed in opposition to traditional AI, or GOFAI. Instead of guiding behavior by symbolic mental representations of the world, subsumption architecture couples sensory information to action selection in an intimate and bottom-up fashion.

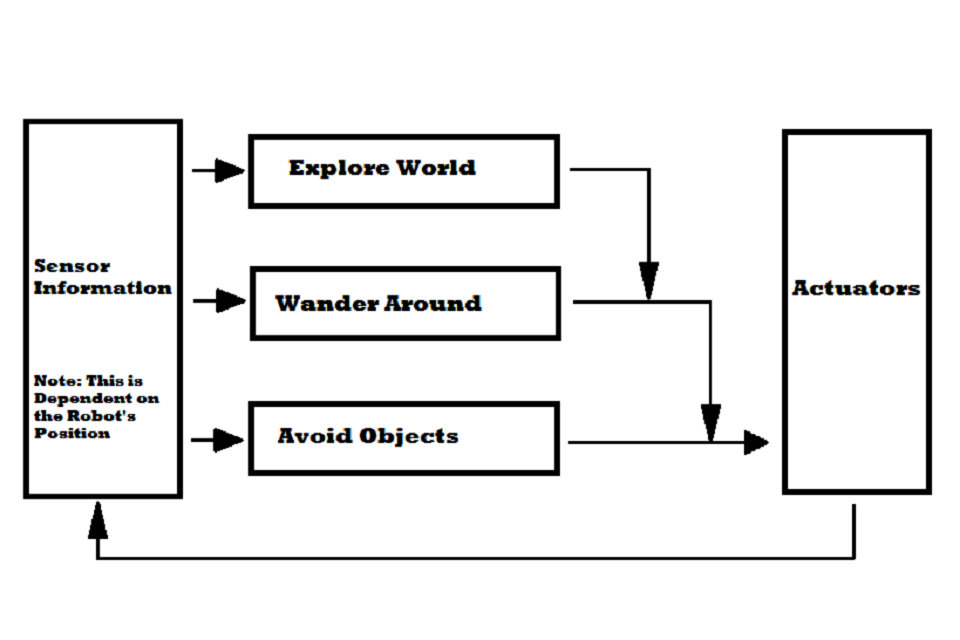

It does this by decomposing the complete behavior into sub-behaviors, which are organized into a hierarchy of layers. Each layer implements a particular level of behavioral competence, and higher levels are able to subsume lower levels (that is, integrate them into a more comprehensive whole) in order to create viable behavior. For example, a robot’s lowest layer could be “avoid an object”. The second layer would be “wander around”, which in turn runs beneath the third layer, “explore the world”. Because a robot must be able to avoid objects in order to wander around effectively, the subsumption architecture creates a system in which the higher layers use the lower-level competencies. The layers, which all receive sensor information, work in parallel and generate outputs. These outputs can be commands to actuators, or signals that suppress or inhibit other layers.
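The three-layer example can be sketched in Python. This is a minimal illustration, not Brooks’s implementation: the function names, the 30-degree and 90-degree constants, the deterministic stand-in for random wandering, and the choice to let each higher layer feed a desired heading into the layer below are all assumptions made for the example. Each higher layer suppresses the heading input of the layer beneath it, while the lowest layer keeps avoiding obstacles whatever heading it is given.

```python
from typing import Optional


def explore(goal_bearing: Optional[float]) -> Optional[float]:
    """Layer 2 (hypothetical): head toward a visible, reachable place if one is seen."""
    return goal_bearing


def wander(tick: int) -> float:
    """Layer 1 (hypothetical): pick a new heading every 10 ticks.

    A deterministic stand-in for the random headings a real wander layer would use.
    """
    return float((tick // 10) * 45 % 360)


def avoid(desired_heading: float, obstacle_bearing: Optional[float]) -> float:
    """Layer 0: keep the desired heading unless an obstacle lies within
    30 degrees of it; in that case turn 90 degrees away from the obstacle."""
    if obstacle_bearing is not None:
        # Signed angle from the desired heading to the obstacle, in [-180, 180).
        diff = (obstacle_bearing - desired_heading + 180) % 360 - 180
        if abs(diff) < 30:
            turn = -90 if diff >= 0 else 90
            return (desired_heading + turn) % 360
    return desired_heading % 360


def control(tick: int, goal_bearing: Optional[float],
            obstacle_bearing: Optional[float]) -> float:
    """Combine the layers; all bearings are in degrees."""
    heading = wander(tick)               # layer 1 replaces layer 0's default straight-ahead heading
    explore_heading = explore(goal_bearing)
    if explore_heading is not None:
        heading = explore_heading        # layer 2 suppresses layer 1's heading
    return avoid(heading, obstacle_bearing)  # layer 0 still keeps the robot off obstacles


print(control(0, 120.0, 130.0))  # 30.0: the goal is at 120, but an obstacle at 130 forces a turn
```

Adding the explore layer required no change to `avoid` or `wander`; it only introduced a new point where one layer’s output replaces another’s input.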

Properties

Physicality

Agents are embodied in the environment: they have a physical body with sensors and actuators, and they perceive and react to changes in the environment.

Situatedness

Agents are part of the real-world environment with which they interact, and this environment directly shapes the agent’s behavior.

Intelligence

The agent’s intelligence is formed predominantly by its encounters with the real environment: the stimuli it receives and its reactions to them.

Emergence

The intelligence of the system as a whole arises in an emergent way, that is, from the interaction of its individual parts.

No explicit knowledge representation

Subsumption architecture does not have an explicit model of the world, so the robot holds no simplified internal picture of its surroundings. This has clear benefits. Because agents do not make predictions about the world, they can work well in an unpredictable environment. The architecture saves the time that would otherwise be spent reading and updating a world model, as well as the time spent running the algorithms that operate on it. It also avoids the problems that arise when a world model no longer matches the real world. However, the approach also has disadvantages: the system is purely reactive, meaning that it only responds to what is currently happening in the world. In other words, the world determines what the robot does.

Distribution

Behavior is distributed among finite automata that perform various tasks. As a result, the system can respond to concurrent events in the environment. The overall behavior is roughly the sum of the behaviors of the individual finite automata. Subsumption architecture is parallel and asynchronous because the finite automata operate independently of each other. All the finite-state machines run continuously, and since each can have its own timing, they do not need to be synchronized.
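The independent timing of the automata can be simulated in Python, with a tick counter standing in for genuinely asynchronous hardware. In this hypothetical sketch, `Automaton`, its `period` parameter, and the `sonar` and `leg` machines are illustrative assumptions; each automaton decides for itself when to step and never waits for another.

```python
from dataclasses import dataclass, field
from typing import Callable, List, Tuple


@dataclass
class Automaton:
    name: str
    period: int                       # hypothetical: steps once every `period` ticks
    state: str
    transition: Callable[[str], str]  # next-state function of this automaton only
    history: List[Tuple[int, str]] = field(default_factory=list)

    def maybe_step(self, tick: int) -> None:
        # Each automaton follows its own timing; nothing here waits on another machine.
        if tick % self.period == 0:
            self.state = self.transition(self.state)
            self.history.append((tick, self.state))


def run(automata: List[Automaton], ticks: int) -> None:
    # The simulated clock is only a stand-in for real concurrency:
    # there is no barrier, and no automaton reads another's state.
    for tick in range(ticks):
        for automaton in automata:
            automaton.maybe_step(tick)


def toggle(a: str, b: str) -> Callable[[str], str]:
    """Build a two-state transition function that alternates between a and b."""
    return lambda s: b if s == a else a


sonar = Automaton("sonar", 2, "listen", toggle("listen", "ping"))
leg = Automaton("leg", 3, "down", toggle("down", "up"))
run([sonar, leg], 6)
print(sonar.history)  # [(0, 'ping'), (2, 'listen'), (4, 'ping')]
print(leg.history)    # [(0, 'up'), (3, 'down')]
```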

Layering

Subsumption architecture consists of layers, each of which implements a certain behavior and consists of one or more finite automata. It is considered modular because each finite automaton performs an independent task. Each finite-state machine has a number of input and output lines. These machines are processors that send messages to each other and are able to store data structures. The processors work asynchronously and are peers of one another. They monitor their inputs and send messages on their outputs. A machine always acts on the latest message received; consequently, a message may be lost if a newer one arrives before the older one has been processed. There is no central control within a layer, and processors have no other means of communication; in particular, there is no shared memory. Each module attends only to its own task. Higher layers have access to sensors and affect the behavior of lower layers by suppressing the inputs or inhibiting the outputs of certain finite automata at lower levels.

This is the mechanism by which higher layers subsume the roles of the lower layers.
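The latest-message-wins behavior of a processor’s input line can be sketched as a one-slot connection. This is a hypothetical Python helper (`Wire`, `send`, `receive` and the `dropped` counter are illustrative names, not part of any published implementation), assuming one sender and one receiver per line; the only state the two processors share is what passes through the wire.

```python
from typing import Any

_EMPTY = object()  # sentinel meaning "no unread message"


class Wire:
    """One-slot connection between two processors (hypothetical helper).

    A new message overwrites any message that has not been read yet, so the
    receiver always sees the latest value and stale ones are lost.
    """

    def __init__(self) -> None:
        self._slot: Any = _EMPTY
        self.dropped = 0  # messages overwritten before they were read

    def send(self, message: Any) -> None:
        if self._slot is not _EMPTY:
            self.dropped += 1
        self._slot = message

    def receive(self, default: Any = None) -> Any:
        message, self._slot = self._slot, _EMPTY
        return default if message is _EMPTY else message


wire = Wire()
wire.send(0.9)  # e.g. a sonar range in metres
wire.send(0.4)  # arrives before the first reading was processed
print(wire.receive(), wire.dropped)  # 0.4 1
```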

Navigation

The most important ability of an agent based on subsumption architecture is to move around the world while avoiding both moving and stationary objects. Navigation is one of the main tasks that agents have to perform, and here it is handled in a highly reactive way (compared with other, cognitive agents). Navigation is mostly done in several layers. The lowest module avoids objects (even those that appear suddenly), while a higher layer steers the agent in a certain direction and ignores obstacles, leaving them to the lower layer. This combination provides an easy way to get from A to B without explicit route planning.
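The two-layer navigation scheme can be sketched on a grid. This is a minimal, hypothetical example: the grid world, the helper names, the fixed order in which `avoid` tries alternative moves, and the `max_steps` cap are assumptions, whereas real robots work with continuous headings and noisy sensors.

```python
from typing import List, Set, Tuple

Cell = Tuple[int, int]
MOVES: List[Cell] = [(1, 0), (0, 1), (-1, 0), (0, -1)]


def toward_goal(pos: Cell, goal: Cell) -> Cell:
    """Higher layer: propose a unit step toward the goal, ignoring obstacles."""
    dx = (goal[0] > pos[0]) - (goal[0] < pos[0])
    dy = (goal[1] > pos[1]) - (goal[1] < pos[1])
    return (dx, 0) if dx != 0 else (0, dy)


def avoid(pos: Cell, proposed: Cell, obstacles: Set[Cell]) -> Cell:
    """Lowest layer: keep the proposed step unless it runs into an obstacle;
    otherwise take the first free move in a fixed order."""
    for move in [proposed] + [m for m in MOVES if m != proposed]:
        if (pos[0] + move[0], pos[1] + move[1]) not in obstacles:
            return move
    return (0, 0)  # boxed in on all sides: stay put


def navigate(start: Cell, goal: Cell, obstacles: Set[Cell],
             max_steps: int = 50) -> List[Cell]:
    """Run both layers each step; no route is planned in advance."""
    pos, path = start, [start]
    for _ in range(max_steps):
        if pos == goal:
            break
        move = avoid(pos, toward_goal(pos, goal), obstacles)
        pos = (pos[0] + move[0], pos[1] + move[1])
        path.append(pos)
    return path


print(navigate((0, 0), (3, 0), obstacles={(1, 0)}))
# [(0, 0), (0, 1), (1, 1), (2, 1), (3, 1), (3, 0)]
```

Because neither layer plans a route, the robot can still get trapped or oscillate in front of larger obstacles such as a U-shaped wall; `max_steps` only bounds the run. Handling such cases is the job of higher competence levels, such as map building.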

Other

Agents operate in real time and are primarily designed to move in a real, dynamic, and complex world.

Reasons for Origin

One of the reasons for creating subsumption architecture was that the authors wanted to address the following requirements of autonomous mobile robots.

Many Goals

A robot may have several goals that conflict with each other and must be resolved in some way. For example, a robot may try to reach a certain point ahead of it while also avoiding obstacles in the way. It may also need to reach some place in the shortest possible time while conserving its energy. The importance and priority of goals are therefore relative and context-dependent. For instance, getting off the tracks when a train is coming is certainly more important than continuing to inspect the track sleepers. The control system must favor high-priority goals while still pursuing lower-priority ones (while getting off the tracks, for instance, the robot must still keep its balance and not fall over).

Multiple sensors

A robot can have multiple sensors (cameras, infrared sensors, acoustic sensors, etc.), and any of them may give erroneous readings. Additionally, there is often no direct analytical relationship between sensor values and the physical quantities of interest. Some sensors may overlap in the areas they measure. Inconsistent readings are common, sometimes because of sensor failure and sometimes because of measurement conditions, for example when a sensor is used outside its operating range. Precise analytical characterizations of that range are often unavailable. The robot must be able to make decisions under these conditions.

Robustness

The robot must be robust. When some sensors fail, it must be able to adapt and rely only on those that still work. When the environment changes drastically, it should still achieve some reasonable behavior rather than freezing or wandering around aimlessly. Ideally, it should also keep working if errors occur on some of its processors.

Scalability

As more sensors and capabilities are added to a robot, it needs more processing capacity; otherwise its original capabilities may be impaired, because they can no longer keep up with events in real time.

Goal

Subsumption architecture attacks the problem of intelligence from a significantly different perspective than traditional AI. Disappointed with the performance of Shakey the robot and similar projects inspired by representations of the conscious mind, Rodney Brooks started creating robots based on a different notion of intelligence, resembling unconscious mental processes. Instead of modelling aspects of human intelligence via symbol manipulation, this approach aims at real-time interaction and viable responses to a dynamic lab or office environment.

The goal was informed by four key ideas:

Situatedness – A major idea of situated AI is that a robot should be able to react to its environment within a human-like time frame. Brooks argues that a situated mobile robot should not represent the world via an internal set of symbols and then act on this model. Instead, he claims that “the world is its own best model”, which means that proper perception-to-action setups can be used to interact with the world directly instead of modelling it. Each module or behavior still models the world, but at a very low level, close to the sensorimotor signals. These simple models necessarily rely on hardcoded assumptions about the world encoded in the algorithms themselves, but they avoid using memory to predict the world’s behavior, relying instead on direct sensory feedback as much as possible.

Embodiment – Brooks argues that building an embodied agent accomplishes two things. First, it forces the designer to create and test an integrated physical control system, not theoretical models or simulated robots that might not work in the physical world. Second, it can solve the symbol grounding problem, a philosophical issue many traditional AIs encounter, by directly coupling sense data to meaningful actions. “The world grounds regress,” and the internal relations of the behavioral layers are directly grounded in the world the robot perceives.

Intelligence – Looking at evolutionary progress, Brooks argues that developing perceptual and mobility skills is a necessary foundation for human-like intelligence. Also, by rejecting top-down representations as a viable starting point for AI, it seems that “intelligence is determined by the dynamics of interaction with the world.”

Emergence – Conventionally, individual modules are not considered intelligent by themselves. It is the interaction of such modules, evaluated by observing the agent and its environment, that is usually deemed intelligent (or not). “Intelligence,” therefore, “is in the eye of the observer.”

The ideas outlined above are still a part of an ongoing debate regarding the nature of intelligence and how the progress of robotics and AI should be fostered.

Layers and augmented finite-state machines

Each layer is made up of a set of processors that are augmented finite-state machines (AFSMs), the augmentation being added instance variables that hold programmable data structures. A layer is a module and is responsible for a single behavioral goal, such as “wander around.” There is no central control within or between these behavioral modules. All AFSMs continuously and asynchronously receive input from the relevant sensors and send output to actuators (or other AFSMs). Input signals that have not been read by the time a new one is delivered are discarded. Such discarded signals are common, and discarding them is useful for performance because it allows the system to work in real time by dealing with the most immediate information.
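A single AFSM can be sketched as a small class whose extra instance variables are the augmentation. The `CollideAFSM` name, its `'clear'`/`'blocked'` states, the threshold value and the `'halt'`/`'go'` messages are assumptions for illustration; the input register keeps only the most recent reading, so an unread value is discarded as described above.

```python
from typing import Optional


class CollideAFSM:
    """Hypothetical AFSM that tells the motors to halt when an obstacle is close.

    The 'augmentation' is the set of instance variables (threshold, halt
    counter) added to a plain finite-state machine with states 'clear' and
    'blocked'.
    """

    def __init__(self, threshold: float) -> None:
        self.threshold = threshold  # instance variable: distance that counts as "too close"
        self.halts_sent = 0         # instance variable: number of halts issued so far
        self.state = "clear"
        self._register: Optional[float] = None  # input register: latest reading only

    def deliver(self, reading: float) -> None:
        self._register = reading  # an unread earlier reading is silently discarded

    def step(self) -> Optional[str]:
        reading, self._register = self._register, None
        if reading is None:
            return None  # nothing new to react to
        if self.state == "clear" and reading < self.threshold:
            self.state = "blocked"
            self.halts_sent += 1
            return "halt"
        if self.state == "blocked" and reading >= self.threshold:
            self.state = "clear"
            return "go"
        return None


afsm = CollideAFSM(threshold=0.5)
afsm.deliver(0.3)   # too close...
afsm.deliver(2.0)   # ...but overwritten before the machine ran
print(afsm.step())  # None: only the latest reading (2.0) was seen
afsm.deliver(0.2)
print(afsm.step())  # halt
```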

Because there is no central control, AFSMs communicate with each other via inhibition and suppression signals. Inhibition signals block signals from reaching actuators or AFSMs, and suppression signals block or replace the inputs to layers or their AFSMs. This system of AFSM communication is how higher layers subsume lower ones, as well as how the architecture deals with priority and action-selection arbitration in general.
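The difference between the two kinds of node can be written as two small functions. This is a simplified sketch that ignores timing; the names `suppress` and `inhibit` and the string messages are hypothetical.

```python
from typing import Optional


def suppress(lower: Optional[str], higher: Optional[str]) -> Optional[str]:
    """Suppression node on an input line: a message from the higher layer
    replaces the lower layer's message; otherwise the lower one passes."""
    return higher if higher is not None else lower


def inhibit(signal: Optional[str], inhibitor: Optional[str]) -> Optional[str]:
    """Inhibition node on an output line: any inhibiting message blocks the
    signal entirely, and nothing is substituted for it."""
    return None if inhibitor is not None else signal


# Hypothetical wiring: layer 1 suppresses layer 0's heading input, and a
# "stop" message from some other module could inhibit the motor output.
heading_in = suppress(lower="heading 0", higher="heading 45")
motor_out = inhibit(signal="forward", inhibitor=None)
print(heading_in, motor_out)  # heading 45 forward
```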

The development of layers follows an intuitive progression. First the lowest layer is created, tested, and debugged. Once that lowest level is running, one creates and attaches the second layer with the proper suppression and inhibition connections to the first layer. After testing and debugging the combined behavior, this process can be repeated for (theoretically) any number of behavioral modules.

Characteristics

With these requirements in mind, the authors adopted the procedure that forms the basis of subsumption architecture. They decomposed the problem of building the robot vertically, according to the desired external manifestations of the control system rather than the robot’s internal operation. They then defined so-called levels of competence. A level of competence is a specification of the desired behavior of the robot across all the environments in which it will move. A higher level of competence implies a more specific class of desired behavior. The main idea of competence levels is that one can build layers of the control system, each corresponding to a given level of competence, and reach a higher level simply by adding a new layer to the existing set.

R. Brooks and his team defined the following levels of competence in 1986:

0. Avoid contact with objects (whether they are moving or stationary)

1. Wander aimlessly around without hitting objects

2. “Explore” the world by seeing places in the distance that look reachable and heading for them

3. Build a map of the environment and plan routes from one place to another

4. Notice changes in the static environment

5. Reason about the world in terms of identifiable objects and perform tasks related to those objects

6. Formulate and execute plans that involve changing the state of the world in a desirable way

7. Reason about the behavior of objects in the world and modify plans accordingly

The authors first built a complete robot control system that achieves level 0 competence and debugged it thoroughly. They then built another layer, called the first-level control system. It can examine data from the level 0 system and is also permitted to inject data into the internal interfaces of level 0, suppressing the normal data flow. The principle is that the zero layer continues to run, unaware of the layer above it that sometimes interferes with its data paths. With the help of the zero layer, this new layer achieves level 1 competence. The same process is repeated to reach higher levels of competence. As soon as the first layer has been built, a working part of the control system is already available, and additional layers can be added later without altering the existing system. The authors argue that this architecture naturally addresses the mobile-robot problems outlined earlier, as follows.

Many goals

Individual layers can work on individual goals concurrently. The suppression mechanism then mediates which actions are taken. The advantage is that there is no need to decide in advance which goal to pursue.

Multiple sensors

Not all sensors need to feed into a central representation; only readings deemed extremely reliable might be admitted to it. At the same time, the other sensor values can still be used by the robot: other layers can process them in ways that suit their own goals, regardless of how other layers treat them.

Robustness

Multiple sensors clearly add to the robustness of the system when their results can be used sensibly. There is another source of robustness in subsumption architecture: well-tested lower layers continue to run when higher layers are added. Because a higher layer can suppress the outputs of lower layers only by actively injecting alternative data, whenever it fails to produce appropriate results the lower layers still produce reasonable ones, albeit at a lower level of competence.
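The fallback described above can be illustrated with a suppression node that overrides the lower layer only for a limited time after each message from the higher layer. Suppression in Brooks’s design is usually described as lasting for a fixed period; the `TimedSuppressor` class and its `hold` parameter are a hypothetical sketch of that idea, not his implementation.

```python
from typing import Optional


class TimedSuppressor:
    """Suppression node with a time constant (hypothetical sketch).

    When the higher layer sends a message, it replaces the lower layer's
    signal for `hold` ticks. If the higher layer then falls silent, for
    example because it has failed, the lower layer's signal gets through
    again once the hold period ends.
    """

    def __init__(self, hold: int) -> None:
        self.hold = hold
        self._value: Optional[str] = None
        self._expires = 0

    def step(self, tick: int, lower: str, higher: Optional[str] = None) -> str:
        if higher is not None:
            self._value, self._expires = higher, tick + self.hold
        if tick < self._expires and self._value is not None:
            return self._value
        return lower


# The higher layer speaks once at tick 0 and then goes silent.
node = TimedSuppressor(hold=3)
outputs = [node.step(t, lower="wander", higher="explore" if t == 0 else None)
           for t in range(5)]
print(outputs)  # ['explore', 'explore', 'explore', 'wander', 'wander']
```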

Extensibility

An obvious way to deal with scalability is to let each new layer run on its own processor.

Robots

The following is a small list of robots that utilize the subsumption architecture.

Allen

Herbert, a soda can collecting robot

Genghis, a robust hexapodal walker

These robots, along with others, are described in detail in Brooks’s paper “Elephants Don’t Play Chess”.

Strengths and weaknesses

The main advantages of the architecture are:

the emphasis on iterative development and testing of real-time systems in their target domain;

the emphasis on connecting limited, task-specific perception directly to the expressed actions that require it; and

the emphasis on distributed and parallel control, thereby integrating the perception, control, and action systems in a manner similar to animals.

The main disadvantages of the architecture are:

the difficulty of designing adaptable action selection through a highly distributed system of inhibition and suppression; and

the lack of large memory and symbolic representation, which seems to prevent the architecture from understanding language.

When subsumption architecture was developed, its novel setup and approach allowed it to succeed in many important domains where traditional AI had failed, namely real-time interaction with a dynamic environment. However, the lack of large memory storage, symbolic representations, and central control puts it at a disadvantage in learning complex actions, in-depth mapping, and understanding language.

Extension

In 1989, Brooks developed subsumption architecture further, mainly with respect to how the inputs of finite automata are suppressed and their outputs inhibited. In 1991, Brooks introduced a hormonal system, in which individual layers could be suppressed or inhibited depending on the presence or absence of a hormone. In 1992, Mataric proposed a behavior-based architecture intended to address the lack of explicit representation of the world without sacrificing robustness and reactivity.

Source from Wikipedia