Structural health monitoring (SHM) refers to the process of implementing a damage detection and characterization strategy for engineering structures. Here damage is defined as changes to the material and/or geometric properties of a structural system, including changes to the boundary conditions and system connectivity, which adversely affect the system’s performance. The SHM process involves the observation of a system over time using periodically sampled dynamic response measurements from an array of sensors, the extraction of damage-sensitive features from these measurements, and the statistical analysis of these features to determine the current state of system health. For long-term SHM, the output of this process is periodically updated information regarding the ability of the structure to perform its intended function in light of the inevitable aging and degradation resulting from operational environments. After extreme events, such as earthquakes or blast loading, SHM is used for rapid condition screening and aims to provide, in near real time, reliable information regarding the integrity of the structure. Infrastructure inspection plays a key role in public safety with regard to both long-term damage accumulation and post-extreme-event scenarios. As part of the rapid developments in data-driven technologies that are transforming many fields in engineering and science, machine learning and computer vision techniques are increasingly capable of reliably diagnosing and classifying patterns in image data, which has clear applications in inspection contexts.

Introduction

Qualitative and non-continuous methods have long been used to evaluate structures for their capacity to serve their intended purpose. Since the beginning of the 19th century, railroad wheel-tappers have used the sound of a hammer striking the train wheel to evaluate whether damage is present. In rotating machinery, vibration monitoring has been used for decades as a performance evaluation technique. Two families of techniques in the field of SHM are wave-propagation-based techniques (Raghavan and Cesnik) and vibration-based techniques. Broadly, the literature on vibration-based SHM can be divided into two aspects. In the first, known as the direct problem, models of the damage are proposed in order to determine the dynamic characteristics (see, for example, Unified Framework). In the second, known as the inverse problem, the dynamic characteristics are used to determine the damage characteristics. In the last ten to fifteen years, SHM technologies have emerged, creating an exciting new field within various branches of engineering. Academic conferences and scientific journals that focus specifically on SHM have been established during this time. These technologies are becoming increasingly common.

Statistical pattern recognition

The SHM problem can be addressed in the context of a statistical pattern recognition paradigm. This paradigm can be broken down into four parts: (1) Operational Evaluation, (2) Data Acquisition and Cleansing, (3) Feature Extraction and Data Compression, and (4) Statistical Model Development for Feature Discrimination. When one attempts to apply this paradigm to data from real world structures, it quickly becomes apparent that the ability to cleanse, compress, normalize and fuse data to account for operational and environmental variability is a key implementation issue when addressing Parts 2-4 of this paradigm. These processes can be implemented through hardware or software and, in general, some combination of these two approaches will be used.

Health assessment of bridges, buildings and other related infrastructure

Commonly known as Structural Health Assessment (SHA) or SHM, this concept is widely applied to various forms of infrastructure, especially as countries all over the world enter a period of even greater construction of infrastructure ranging from bridges to skyscrapers. Where damage to structures is concerned, it is important to note that there are stages of increasing difficulty, each of which requires knowledge of the previous stages, namely:

Detecting the existence of the damage on the structure

Locating the damage

Identifying the types of damage

Quantifying the severity of the damage

It is necessary to employ signal processing and statistical classification to convert sensor data on the health status of the infrastructure into damage information for assessment.

Operational evaluation

Operational evaluation attempts to answer four questions regarding the implementation of a damage identification capability:

i) What is the life-safety and/or economic justification for performing the SHM?

ii) How is damage defined for the system being investigated and, for multiple damage possibilities, which cases are of the most concern?

iii) What are the conditions, both operational and environmental, under which the system to be monitored functions?

iv) What are the limitations on acquiring data in the operational environment?

Operational evaluation begins to set the limitations on what will be monitored and how the monitoring will be accomplished. This evaluation starts to tailor the damage identification process to features that are unique to the system being monitored and tries to take advantage of unique features of the damage that is to be detected.

Data acquisition, normalization and cleansing

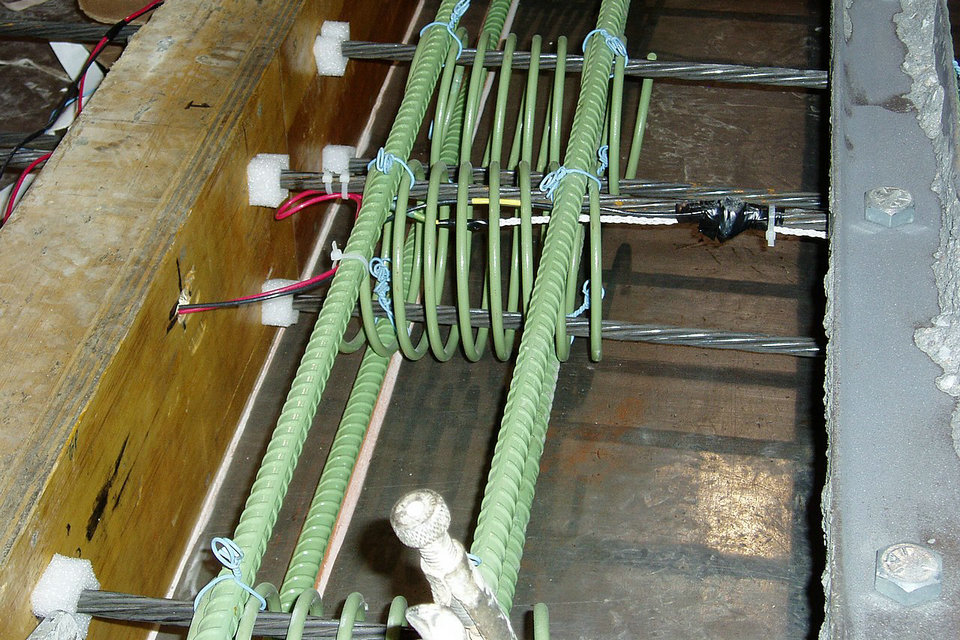

The data acquisition portion of the SHM process involves selecting the excitation methods, the sensor types, number and locations, and the data acquisition/storage/transmittal hardware. Again, this process will be application specific. Economic considerations will play a major role in making these decisions. The interval at which data should be collected is another consideration that must be addressed.

Because data can be measured under varying conditions, the ability to normalize the data becomes very important to the damage identification process. As it applies to SHM, data normalization is the process of separating changes in sensor readings caused by damage from those caused by varying operational and environmental conditions. One of the most common procedures is to normalize the measured responses by the measured inputs. When environmental or operational variability is an issue, the need can arise to normalize the data in some temporal fashion to facilitate the comparison of data measured at similar times of an environmental or operational cycle. Sources of variability in the data acquisition process and in the system being monitored need to be identified and minimized to the extent possible. In general, not all sources of variability can be eliminated. Therefore, it is necessary to make the appropriate measurements such that these sources can be statistically quantified. Variability can arise from changing environmental and test conditions, changes in the data reduction process, and unit-to-unit inconsistencies.
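As a minimal sketch of normalizing measured responses by measured inputs, the Python example below divides the response spectrum by the input spectrum at a single frequency bin. The function names, the signal lengths, and the structural gain of 2.5 are illustrative assumptions, and the data are noise-free; a practical estimate would average over many records.

```python
import cmath
import math

def dft_bin(signal, k):
    """Return the k-th discrete Fourier transform coefficient of a real signal."""
    n = len(signal)
    return sum(x * cmath.exp(-2j * math.pi * k * i / n) for i, x in enumerate(signal))

def response_per_unit_input(excitation, response, k):
    """Ratio of response to input at DFT bin k (a single-bin frequency response estimate)."""
    return dft_bin(response, k) / dft_bin(excitation, k)

# Hypothetical data: the same structure excited at two different input levels.
n, k = 64, 4   # 64 samples, excitation placed exactly on DFT bin 4 (assumed values)
gain = 2.5     # assumed structural gain at that frequency
weak_input = [1.0 * math.sin(2 * math.pi * k * i / n) for i in range(n)]
strong_input = [3.0 * math.sin(2 * math.pi * k * i / n) for i in range(n)]
weak_response = [gain * x for x in weak_input]
strong_response = [gain * x for x in strong_input]

h_weak = response_per_unit_input(weak_input, weak_response, k)
h_strong = response_per_unit_input(strong_input, strong_response, k)
print(abs(h_weak), abs(h_strong))  # both ratios recover the assumed gain
```

The raw responses differ by a factor of three, yet the normalized quantity is the same for both records, so a later change in it is less likely to be caused by the excitation level alone.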

Data cleansing is the process of selectively choosing data to pass on to or reject from the feature selection process. The data cleansing process is usually based on knowledge gained by individuals directly involved with the data acquisition. As an example, an inspection of the test setup may reveal that a sensor was loosely mounted and, hence, based on the judgment of the individuals performing the measurement, this set of data or the data from that particular sensor may be selectively deleted from the feature selection process. Signal processing techniques such as filtering and re-sampling can also be thought of as data cleansing procedures.
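The two kinds of cleansing described above, rejecting data on the basis of engineering judgment and applying filtering and re-sampling, can be sketched as follows. The channel names, the sample values, and the choice of a two-point moving average are hypothetical and serve only to illustrate the idea.

```python
def drop_channels(records, rejected):
    """Remove channels that the test engineer has flagged as unreliable."""
    return {name: samples for name, samples in records.items() if name not in rejected}

def moving_average(samples, window):
    """Simple low-pass filter: mean of each run of `window` consecutive samples."""
    return [sum(samples[i:i + window]) / window
            for i in range(len(samples) - window + 1)]

def downsample(samples, factor):
    """Keep every `factor`-th sample (intended for use after low-pass filtering)."""
    return samples[::factor]

# Hypothetical records; "acc_3" stands for a sensor found to be loosely mounted.
records = {
    "acc_1": [0.0, 1.0, 0.0, -1.0, 0.0, 1.0, 0.0, -1.0],
    "acc_2": [0.5, 0.7, 0.5, 0.3, 0.5, 0.7, 0.5, 0.3],
    "acc_3": [9.0, -8.0, 7.5, -9.1, 8.8, -7.9, 9.3, -8.6],
}
kept = drop_channels(records, rejected={"acc_3"})
cleansed = {name: downsample(moving_average(samples, window=2), factor=2)
            for name, samples in kept.items()}
print(sorted(cleansed))
```

Keeping the rejection step explicit, rather than silently deleting files, leaves a record of which sensors were excluded and why.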

Finally, the data acquisition, normalization, and cleansing portion of the SHM process should not be static. Insight gained from the feature selection process and the statistical model development process will provide information regarding changes that can improve the data acquisition process.

Feature extraction and data compression

The area of the SHM process that receives the most attention in the technical literature is the identification of data features that allow one to distinguish between the undamaged and damaged structure. Inherent in this feature selection process is the condensation of the data. The best features for damage identification are, again, application specific.

One of the most common feature extraction methods is based on correlating measured system response quantities, such as vibration amplitude or frequency, with first-hand observations of the degrading system. Another method of developing features for damage identification is to apply engineered flaws, similar to ones expected in actual operating conditions, to systems and develop an initial understanding of the parameters that are sensitive to the expected damage. The flawed system can also be used to validate that the diagnostic measurements are sensitive enough to distinguish between features identified from the undamaged and damaged system. The use of analytical tools such as experimentally validated finite element models can be a great asset in this process. In many cases the analytical tools are used to perform numerical experiments where the flaws are introduced through computer simulation. Damage accumulation testing, during which significant structural components of the system under study are degraded by subjecting them to realistic loading conditions, can also be used to identify appropriate features. This process may involve induced-damage testing, fatigue testing, corrosion growth, or temperature cycling to accumulate certain types of damage in an accelerated fashion. Insight into the appropriate features can be gained from several types of analytical and experimental studies as described above and is usually the result of information obtained from some combination of these studies.
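To make the idea of a frequency-based feature concrete, the sketch below estimates the dominant vibration frequency of a record with a direct discrete Fourier transform and compares a baseline record with a later one. The simulated 10 Hz and 9 Hz responses, the sampling rate, and all function names are assumptions for illustration; they do not represent any particular structure.

```python
import cmath
import math

def dominant_frequency(samples, sample_rate):
    """Return the frequency (Hz) of the largest DFT peak below the Nyquist limit."""
    n = len(samples)
    mean = sum(samples) / n
    centered = [x - mean for x in samples]  # remove the static offset
    best_bin, best_mag = 1, 0.0
    for k in range(1, n // 2):
        coeff = sum(x * cmath.exp(-2j * math.pi * k * i / n)
                    for i, x in enumerate(centered))
        if abs(coeff) > best_mag:
            best_bin, best_mag = k, abs(coeff)
    return best_bin * sample_rate / n

def simulated_response(freq_hz, sample_rate, n):
    """Hypothetical noise-free response containing a single vibration frequency."""
    return [math.sin(2 * math.pi * freq_hz * i / sample_rate) for i in range(n)]

sample_rate, n = 128.0, 128  # assumed values giving a 1 Hz frequency resolution
baseline = dominant_frequency(simulated_response(10.0, sample_rate, n), sample_rate)
current = dominant_frequency(simulated_response(9.0, sample_rate, n), sample_rate)
frequency_shift = current - baseline
print(baseline, current, frequency_shift)
```

A downward shift such as this one is the kind of change a stiffness loss would typically produce, but temperature and loading can shift frequencies as well, which is why the feature must be interpreted together with the normalization step.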

The operational implementation and diagnostic measurement technologies needed to perform SHM produce more data than traditional uses of structural dynamics information. A condensation of the data is advantageous and necessary when comparisons of many feature sets obtained over the lifetime of the structure are envisioned. Also, because data will be acquired from a structure over an extended period of time and in an operational environment, robust data reduction techniques must be developed to retain feature sensitivity to the structural changes of interest in the presence of environmental and operational variability. To further aid in the extraction and recording of quality data needed to perform SHM, the statistical significance of the features should be characterized and used in the condensation process.
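The condensation described above can be sketched as reducing each raw time history to a few scalar features and then characterizing their scatter across repeated records. The particular features chosen here (RMS, peak, crest factor) and the simulated records are illustrative assumptions, not a recommended feature set.

```python
import math
import statistics

def condense(samples):
    """Reduce a raw time history to a few scalar features."""
    rms = math.sqrt(sum(x * x for x in samples) / len(samples))
    peak = max(abs(x) for x in samples)
    return {"rms": rms, "peak": peak, "crest_factor": peak / rms}

def summarize(feature_sets, name):
    """Mean and sample standard deviation of one feature across repeated records."""
    values = [features[name] for features in feature_sets]
    return statistics.mean(values), statistics.stdev(values)

# Hypothetical repeated records of the same state with slightly varying amplitude.
records = [
    [scale * math.sin(2 * math.pi * i / 16) for i in range(64)]
    for scale in (1.00, 1.02, 0.98, 1.01)
]
features = [condense(record) for record in records]
rms_mean, rms_std = summarize(features, "rms")
print(len(records[0]), "samples ->", len(features[0]), "features per record")
```

Here 64 samples per record are condensed to three numbers, and the standard deviation gives a first measure of how much a feature varies when the structure itself has not changed.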

Statistical model development

The portion of the SHM process that has received the least attention in the technical literature is the development of statistical models for discrimination between features from the undamaged and damaged structures. Statistical model development is concerned with the implementation of the algorithms that operate on the extracted features to quantify the damage state of the structure. The algorithms used in statistical model development usually fall into three categories: group classification, regression analysis, and outlier (novelty) detection. When data are available from both the undamaged and damaged structure, the statistical pattern recognition algorithms fall into the general classification referred to as supervised learning. Group classification and regression analysis are categories of supervised learning algorithms. Unsupervised learning refers to algorithms that are applied to data not containing examples from the damaged structure. Outlier or novelty detection is the primary class of algorithms applied in unsupervised learning applications. All of the algorithms analyze statistical distributions of the measured or derived features to enhance the damage identification process.
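A minimal sketch of outlier (novelty) detection in the unsupervised setting is given below: a baseline is fitted to a single feature measured on the undamaged structure, and new values are flagged when they lie too many standard deviations away. The feature values and the threshold of 3.0 are assumptions chosen for illustration; with several features, a multivariate distance would take the place of this one-dimensional index.

```python
import statistics

def fit_baseline(features):
    """Estimate the mean and sample standard deviation of an undamaged-state feature."""
    return statistics.mean(features), statistics.stdev(features)

def novelty_index(value, baseline):
    """Distance of a new feature value from the baseline, in standard deviations."""
    mean, std = baseline
    return abs(value - mean) / std

def is_outlier(value, baseline, threshold=3.0):
    """Flag a value whose novelty index exceeds the (assumed) threshold."""
    return novelty_index(value, baseline) > threshold

# Hypothetical natural-frequency estimates (Hz) from the undamaged structure.
undamaged = [10.02, 9.98, 10.01, 9.99, 10.00, 10.03, 9.97, 10.00]
baseline = fit_baseline(undamaged)
flags = [is_outlier(value, baseline) for value in (10.01, 9.60)]
print(flags)
```

No damaged-state data are needed to set this up, which is what makes the approach unsupervised; it can indicate that something has changed but not, on its own, what kind of damage is present.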

Fundamental axioms

Based on the extensive literature that has developed on SHM over the last 20 years, it can be argued that this field has matured to the point where several fundamental axioms, or general principles, have emerged. The axioms are listed as follows:

Axiom I: All materials have inherent flaws or defects;

Axiom II: The assessment of damage requires a comparison between two system states;

Axiom III: Identifying the existence and location of damage can be done in an unsupervised learning mode, but identifying the type of damage present and the damage severity can generally only be done in a supervised learning mode;

Axiom IVa: Sensors cannot measure damage. Feature extraction through signal processing and statistical classification is necessary to convert sensor data into damage information;

Axiom IVb: Without intelligent feature extraction, the more sensitive a measurement is to damage, the more sensitive it is to changing operational and environmental conditions;

Axiom V: The length- and time-scales associated with damage initiation and evolution dictate the required properties of the SHM sensing system;

Axiom VI: There is a trade-off between the sensitivity to damage of an algorithm and its noise rejection capability;

Axiom VII: The size of damage that can be detected from changes in system dynamics is inversely proportional to the frequency range of excitation.
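One way to read Axiom VII, offered here as an interpretation rather than as part of the axiom, is through wavelength: for a wave of speed c and frequency f the wavelength is c / f, so a higher excitation frequency gives a shorter wavelength and therefore a finer length scale at which the structure is interrogated. The wave speed and frequencies below are assumed round numbers for illustration only.

```python
def wavelength(wave_speed_m_s, frequency_hz):
    """Wavelength in metres for a given wave speed and excitation frequency."""
    return wave_speed_m_s / frequency_hz

wave_speed = 5000.0  # assumed wave speed in m/s, illustrative only
for frequency in (1e3, 1e5):  # assumed excitation frequencies in Hz
    print(frequency, "Hz ->", wavelength(wave_speed, frequency), "m")
```

Raising the frequency by a factor of 100 shortens the wavelength by the same factor, which matches the inverse proportionality stated in the axiom.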

Source from Wikipedia